This is a truly bizarre report.

A woman named BT had an accident at the age of 20 and became blind. Thirteen years later, a psychiatric clinic that had diagnosed her with dissociative identity disorder (formerly known as multiple personality disorder) referred her for psychotherapy to Bruno Waldvogel, one of the two authors of the paper.

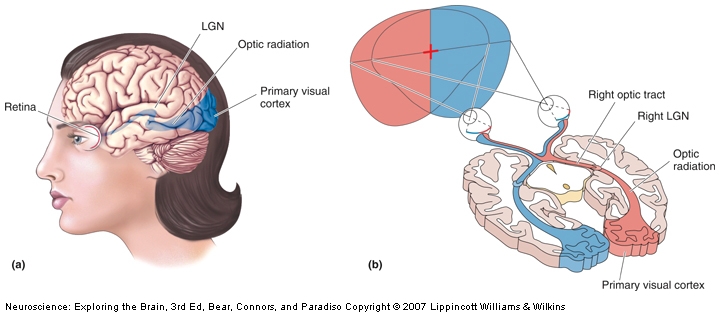

The diagnosis of cortical blindness was established after extensive ophthalmologic tests, in which she appeared blind but without any damage to the eyes. So, by inference, the damage had to be in the brain. Remarkably (we shall see later why), she had no oculomotor reflexes in response to glare. Moreover, visual evoked potentials (VEPs, i.e. EEG activity recorded over the occipital region in response to visual stimulation) showed no activity in the primary visual area of the brain (V1).

During the four years of psychotherapy, BT showed more than 10 distinct personalities. One of them, a teenage male, started to see words in a magazine and pretty soon could see everything. With the help of hypnotherapeutic techniques, more and more personalities started to see.

“Sighted and blind states could alternate within seconds” (Strasburger & Waldvogel, 2015).

The VEPs showed little or no activity when a blind personality was “on” and normal activity when a sighted personality was “on”. This is extremely curious, because similar studies in people with psychogenic blindness or in anesthetized people found intact VEPs.

There are a couple of conclusions to draw from this: 1) BT was misdiagnosed, as brain damage is unlikely given that some of her personalities could see, and 2) multiple personalities (or dissociative identities, as they are now called) are real in the sense that they can be separated at the biological level.

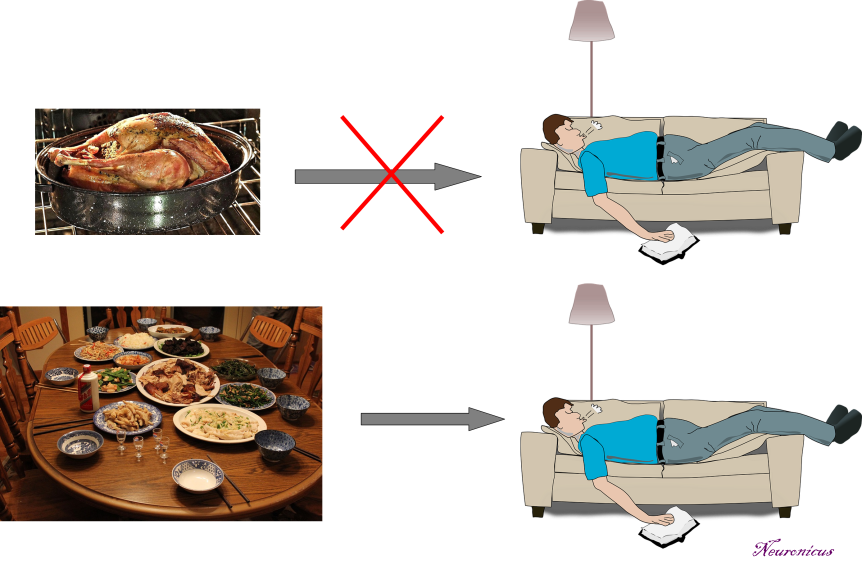

Fascinating! The next question is, obviously, what is the mechanism behind this? The authors say that it is very likely the LGN (the lateral geniculate nucleus of the thalamus), which is the only relay between the retina and V1 (see pic). It could be. It is certainly possible. Unfortunately, so are other putative mechanisms: about 10% of the neurons in the retina also project to the superior colliculus, and some others go directly to the hypothalamus, completely bypassing the thalamus. Also, because it is impossible to time the switching between personalities precisely, even if you put the woman in an MRI scanner it would be difficult to establish whether the switch to blindness mode results from bottom-up or top-down modulation (i.e. whether the visual information never reaches V1, reaches V1 and is suppressed there, or some signal from other brain areas inhibits V1 completely, so that it is unresponsive when the visual information arrives).

Despite the limitations, I would certainly try to get the woman into an fMRI scanner. C’mon, people, this is an extraordinary subject, and if she gave permission for the case report, surely she would not object to the scanning.

Reference: Strasburger H & Waldvogel B (Epub 15 Oct 2015). Sight and blindness in the same person: Gating in the visual system. PsyCh Journal. doi: 10.1002/pchj.109.

By Neuronicus, 29 November 2015