This is short and sweet: Don’t Look Up Illustrates 5 Myths That Fuel Rejection of Science (Scientific American)

Category: Behavior

Social groups are not random

I re-blog other people’s posts extremely rarely, but this one is worth it. It’s about how groups form based on the amount of information they are given and, crucially, how the amount of information can change individual behavior and split groups. It relates to political polarization and echo chambers. Read it.

After you read it, you will understand my question: I wonder by how much k would increase in a non-binary environment, say, if the participants were given 3 colors instead of 2. The authors argue that there is a k threshold beyond which the amount of information makes no further difference. But that is because the groups had already completed the binary task, so more information was useless due to a ceiling effect. Basically, my question is: at what point would more information stop making a difference in behavior if there were more choices? Would that relationship be logarithmic, linear, or exponential? Good paper, and good coverage by CNRS, at ScienceBlog.

By Neuronicus, 24 February 2021

P.S. I haven’t written in a while for many reasons, one of which is that this pesky WordPress changed its post editor and, frankly, I don’t have the time or patience to figure it out right now. But I’ll be back :).

Chloroquine-induced psychosis

In the past few days, a new hot subject has gripped the attention of the media and worried medical doctors, as if they didn’t have enough to deal with: chloroquine. That is because the President of the U.S.A., Donald Trump, endorsed chloroquine as a treatment for COVID-19, calling it a “game changer”, despite his very own director of the National Institute of Allergy and Infectious Diseases (NIAID), Dr. Anthony Fauci, emphatically stating that the evidence for (hydroxy)chloroquine is only anecdotal (see the White House briefing transcript here).

Many medical doctors spoke out urging caution about the drug, particularly about the combination the President endorses: hydroxychloroquine + azithromycin. As I understand it, this combination can be lethal because it can lead to fatal arrhythmia.

As for (hydroxy)chloroquine’s potential to help treat COVID-19, the jury is still out. Far out. There have been a few interesting observations of the drugs working in a Petri dish (Liu et al., 2020; Wang et al., 2020), but as any pharma company knows, the road from Petri dishes to pharmacies is long and perilous. To be precise, only 1 in 5,000 drugs makes it from pre-clinical trials to approval, and the process takes about 12 years (Kraljevic et al., 2004). It takes so long not because of red tape, as some would deplore, but because it takes time to see what the drug does in humans (Phase 0), which doses are safe and don’t kill you (Phase 1), whether it works at all for the intended disease (Phase 2), how it compares with other drugs and what its long-term side effects are (Phase 3) and, finally, what the risks and benefits of the drug are (Phase 4). While we could probably skip Phases 0 and 4 during such a pandemic, there is no way I would subject my family to anything that hasn’t passed Phases 1, 2, and 3. And those take years. Even with all the money a nation-state has, it would still take 18 months to do it semi-properly.

Luckily for all of us, chloroquine is a very old and established antimalarial medicine, so we can safely dispense with Phases 0, 1, and 4 and start Phase 2 with (hydroxy)chloroquine. That is exactly what the WHO and several others are doing right now. But we don’t have enough data yet. So one can hope as much as one wants, but that doesn’t make it go any faster.

Unfortunately, and here we get to the crux of the post, following the President’s endorsement many people started to hoard chloroquine, particularly the rich, who can afford to “convince” an MD to write them a script for it. In countries where chloroquine is sold without a prescription, like Nigeria, where it is used for arthritis, people rushed to clear out the pharmacies, and some didn’t just stockpile it: they took it without reason and without knowing the dosage. And they died. [EDIT, 23 March 2020. If you think that would never happen in the land of the brave, think again: the first death from irresponsibly taking chloroquine just happened in the USA]. In addition, chloroquine hoarding in the US by those who can afford it (it costs about $200 for 50 pills) has led to a shortage for those who really need it, like lupus or rheumatology patients.

For those who blindly hoard or take chloroquine without a prescription, I have a little morsel of knowledge to impart. Remember, I am not an MD; I hold a PhD in neuroscience. So I’ll tell you what my field knows about chloroquine.

Both chloroquine and hydroxychloroquine can cause severe psychosis.

That’s right. More than 7.1% of people who took chloroquine as prophylaxis or for treatment of malaria developed “mental and neurological manifestations” (Bitta et al., 2017). “Hydroxychloroquine was associated with the highest prevalence of mental neurological manifestations” (p. 12). The phenomenon is well reported and even has its own syndrome name: “chloroquine-induced psychosis”. It was observed more than 50 years ago, in 1962 (Mustakallio et al., 1962). The mechanisms are unclear, and several hypotheses have been put forward, such as the drugs disrupting NMDA transmission, calcium homeostasis, or vacuole exocytosis, or some other mysterious immune- or transport-related mechanism. Because the symptoms are so acute, so persistent, and so diverse, more than one neurotransmitter system in the brain must be affected.

Chloroquine-induced psychosis has a sudden onset, within 1-2 days of ingestion. The syndrome presents with paranoid ideation, persecutory delusions, hallucinations, fear, confusion, delirium, altered mood, personality changes, irritability, insomnia, suicidal ideation, and violence (Biswas et al., 2014; Mascolo et al., 2018). All this at moderately low or therapeutically recommended doses (Good & Shader, 1982). One or two pills can be lethal in toddlers (Smith & Klein-Schwartz, 2005). The symptoms persist long after ingestion of the drug has stopped (Maxwell et al., 2015).

Still want to take it “just in case”?

P.S. A clarification: chemically, hydroxychloroquine differs from chloroquine by only one hydroxyl group (OH). Both are antimalarials and both have been tested in vitro against SARS-CoV-2. There are slight differences between them in toxicity, safety, and even mechanisms, but for the purposes of this post I have treated them as one drug, since both produce psychosis.

REFERENCES:

1) Biswas PS, Sen D, & Majumdar R. (2014, Epub 28 Nov 2013). Psychosis following chloroquine ingestion: a 10-year comparative study from a malaria-hyperendemic district of India. General Hospital Psychiatry, 36(2): 181–186. doi: 10.1016/j.genhosppsych.2013.07.012, PMID: 24290896 ARTICLE

2) Bitta MA, Kariuki SM, Mwita C, Gwer S, Mwai L, & Newton CRJC (2 Jun 2017). Antimalarial drugs and the prevalence of mental and neurological manifestations: A systematic review and meta-analysis. Version 2. Wellcome Open Research, 2(13): 1-20. PMCID: PMC5473418, PMID: 28630942, doi: 10.12688/wellcomeopenres.10658.2 ARTICLE|FREE FULLTEXT PDF

3) Good MI & Shader RI (1982). Lethality and behavioral side effects of chloroquine. Journal of Clinical Psychopharmacology, 2(1): 40–47. doi: 10.1097/00004714-198202000-00005, PMID: 7040501. ARTICLE

4) Kraljevic S, Stambrook PJ, & Pavelic K (Sep 2004). Accelerating drug discovery. EMBO Reports, 5(9): 837–842. doi: 10.1038/sj.embor.7400236, PMID: 15470377, PMCID: PMC1299137. ARTICLE | FREE FULLTEXT PDF

5) Mascolo A, Berrino PM, Gareri P, Castagna A, Capuano A, Manzo C, & Berrino L. (Oct 2018, Epub 9 Jun 2018). Neuropsychiatric clinical manifestations in elderly patients treated with hydroxychloroquine: a review article. Inflammopharmacology, 26(5): 1141-1149. doi: 10.1007/s10787-018-0498-5, PMID: 29948492. ARTICLE

6) Maxwell NM, Nevin RL, Stahl S, Block J, Shugarts S, Wu AH, Dominy S, Solano-Blanco MA, Kappelman-Culver S, Lee-Messer C, Maldonado J, & Maxwell AJ (Jun 2015, Epub 9 Apr 2015). Prolonged neuropsychiatric effects following management of chloroquine intoxication with psychotropic polypharmacy. Clinical Case Reports, 3(6): 379-87. doi: 10.1002/ccr3.238, PMID: 26185633. ARTICLE | FREE FULLTEXT PDF

7) Mustakallio KK, Putkonen T, & Pihkanen TA (1962 Dec 29). Chloroquine psychosis? Lancet, 2(7270): 1387-1388. doi: 10.1016/s0140-6736(62)91067-x, PMID: 13936884. ARTICLE

8) Smith ER & Klein-Schwartz WJ (May 2005). Are 1-2 dangerous? Chloroquine and hydroxychloroquine exposure in toddlers. The Journal of Emergency Medicine, 28(4): 437-443. doi: 10.1016/j.jemermed.2004.12.011, PMID: 15837026. ARTICLE

Studies about chloroquine and hydroxychloroquine on SARS-CoV-2 in vitro:

- Liu, J., Cao, R., Xu, M., Wang, X., Zhang, H., Li, Y., Hu, Z., Zhong, W., & Wang, M. (18 March 2020). Hydroxychloroquine, a less toxic derivative of chloroquine, is effective in inhibiting SARS-CoV-2 infection in vitro. Cell Discovery, 6 (16), https://doi.org/10.1038/s41421-020-0156-0 ARTICLE | FREE FULLTEXT PDF

- Wang, M., Cao, R., Zhang, H., Yang, X., Liu, J., Xu, M., Shi, Z., Hu, Z., Zhong, W., & Xiao, G. (18 March 2020). Remdesivir and chloroquine effectively inhibit the recently emerged novel coronavirus (2019-nCoV) in vitro. Cell Research, 30: 269–271. https://doi.org/10.1038/s41422-020-0282-0. ARTICLE | FREE FULLTEXT PDF

The first study about chloroquine and hydroxychloroquine on SARS-CoV-2 in vivo is below. Unfortunately, it has some methodological flaws, see here and here, which hopefully will be corrected once the peer reviewers take a closer look at it. UPDATE [31-3-2020]: It seems the article is flawed in more than one way, with serious ethical issues (the timeline of treatment doesn’t match the reported methods, patients appear and disappear from data points, graphs differ depending on the venue, it was published in the same journal where the author is an editor, no peer review, no blinding, no placebo, controls barely tested; plus, no reputable researcher should announce on a YouTube video that they are the genius who cured COVID-19, particularly when they get to publish in 24 hours anyway).

Anyway, here it is:

- Gautret P, Lagier J-C, Parola P, Hoang VT, Meddeb L, Mailhe M, Doudier B, Courjon J, Giordanengo V, Esteves Vieira V, Tissot Dupont H, Colson SEP, Chabriere E, La Scola B, Rolain J-M, Brouqui P, Raoult D. (20 March 2020). Hydroxychloroquine and azithromycin as a treatment of COVID-19: results of an open-label non-randomized clinical trial. International Journal of Antimicrobial Agents, PII:S0924-8579(20)30099-6, https://doi.org/10.1016/j.ijantimicag.2020.105949. ARTICLE | FREE FULLTEXT PDF

These studies are also not peer reviewed, or at the very least not properly peer reviewed. I say this so that you take them with a grain of salt, not to criticize them in the slightest. I do commend the speed with which these were done and published given the pandemic. Bravo to all the authors involved (except maybe the last one, if it proves to be fraudulent). And a thumbs up to the journals that made the data freely available in record time. Unfortunately, we still have a long way to go from these papers to a treatment.

By Neuronicus, 22 March 2020

Polite versus compassionate

After reading these two words, my first thought was that you can have a whole range of people: some compassionate but not polite (ahem, here, I hope), some polite but not compassionate (we all know somebody like that, usually a family member or coworker), some compassionate and polite (I wish I were one of those), and some neither (some Twitter and Facebook comments and profiles come to mind…).

It turns out that this is not the case. Usually, people are one or the other. Of course there are exceptions, but most people who score high on one trait tend to score low on the other.

Hirsh et al. (2010) gave a few questionnaires to over 600 mostly White Canadians of varying ages. The questionnaires measured personality, morality, and political preferences.

After regression analyses followed by factor analyses, which are statistical tools fancier than your run-of-the-mill correlation, the authors found that polite people tend to be politically conservative, affirming support for the Canadian or U.S. Republican Parties, whereas compassionate people more readily identified as liberals, i.e. Democrats.

Previous research has shown that political conservatives value order and traditionalism, in-group loyalty, and purity, are resistant to change, and readily accept inequality. In contrast, political liberals value fairness, equality, compassion, and justice, and are open to change. The findings of this study fit well with previous research because compassion relies on the perception of others’ distress, for which we have a better term: empathy. “Politeness, by contrast, appears to reflect the components of Agreeableness that are more closely linked to norm compliance and traditionalism” (p. 656). So it makes sense that people who are Polite value norm compliance and traditionalism and, as such, end up being conservatives, whereas people who are Compassionate value empathy and equality more than conformity, so they end up being liberals. Importantly, empathy is a strong predictor of prosocial behavior (see Damon W. & Eisenberg N (Eds.) (2006). Prosocial development, in Handbook of Child Psychology: Social, Emotional, and Personality Development, New York, NY, Wiley Pub.).

I want to stress that this paper was published in 2010, so the research was probably conducted a year or two before the publication date, just in case you were wondering.

REFERENCE: Hirsh JB, DeYoung CG, Xu X, & Peterson JB. (May 2010, Epub 6 Apr 2010). Compassionate liberals and polite conservatives: associations of agreeableness with political ideology and moral values. Personality & Social Psychology Bulletin, 36(5):655-64. doi: 10.1177/0146167210366854, PMID: 20371797. ABSTRACT

By Neuronicus, 24 February 2020

Teach handwriting in schools!

I have begun this blogpost many times. I have erased it many times. That is because today’s subject, handwriting, is very sensitive for me. Most of what I wrote and subsequently erased was a rant: angry at times, full of profanity at other times. The rest were paragraphs that can easily be categorized as pleading, bargaining, imploring to teach handwriting in American schools. Or, if they already do, to do it less chaotically, more seriously, more consistently, with a LOT more practice, and hopefully before the child hits puberty.

Because, contrary to most educators’ beliefs, handwriting is not the same as typing. Nor is printing (manuscript writing) the same as cursive writing, but that’s another kettle of fish.

Somehow, sometime, a huge disconnect opened up between scholarly researchers and educators. In medicine, researchers’ findings tend to take 10-15 years before they are believed and implemented in medical practice. In education… it seems that even findings cemented by Nobel prizes 100 years ago are alien to the ranks of educators. It didn’t use to be like that. I don’t know when educators became distrustful of data and science. When exactly did they start to replace evidence with “feels right” and “it’s our school’s philosophy”? When did they start saying “research shows…” every other sentence without being able to produce a single item, name, citation, paper, anything of said research? When did educators become so… uneducated? I could write (and rant!) a lot about the subject of handwriting or about what exactly a Master’s in Education teaches educators. But I’m tired of it before I’ve even begun, because I’ve been doing it for a while now and it’s exhausting. It takes an incredible amount of effort, at least for me, to bring the matter of handwriting so genteelly, tactfully, and non-threateningly to the attention of the fragile egos of the powers that be in charge of educating the next generation. Yes, yes, there must be rarae aves among educators who actually teach and who do listen to or read papers on education from peer-reviewed journals; but I haven’t found them. I wonder who research in education is for, if neither educators nor policy makers have any clue about it…

Here is another piece of education research that will probably go unremarked by those it is intended for, i.e. educators and policy makers. Mueller & Oppenheimer (2014) took a closer look at the note-taking habits of 65 Princeton and 260 UCLA students. The students were instructed to take notes in their usual classroom style while watching TED talks (five different talks, each longer than 15 minutes) that were “interesting but not common knowledge” (p. 1160). Afterwards, the subjects completed a hard working-memory task and answered factual and conceptual questions about the content of the “lectures”.

The students who took notes by hand (I’ll call them longhanders) performed significantly better on conceptual questions about the lecture content than those who typed on laptops (typers). The researchers noticed that the typers tended to transcribe verbatim what was being said, whereas the longhanders didn’t, and this corresponded directly with their performance. In their words,

“laptop note takers’ tendency to transcribe lectures verbatim rather than processing information and reframing it in their own words is detrimental to learning.” (Abstract).

Because typing is faster than writing, typers can afford not to think about what they type and go into full scribe mode, with the brain elsewhere, not listening to a single word of the lecture (believe me, I know, both as a student and as a University professor). In contrast, longhanders cannot write verbatim and must process the information to extract what’s relevant. In the words of cognitive psychologists everywhere, and as stated in every cognitive psychology textbook written over the last 70 years: depth of processing facilitates learning. Maybe that could be taught in a Master’s in Education…

Pet peeves aside, the next step in today’s paper was to see whether forcing the typers to forgo verbatim note-taking and do some information processing would improve learning. It did not, presumably because “the instruction to not take verbatim notes was completely ineffective at reducing verbatim content (p = .97)” (p. 1163).
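For the curious, here is one hypothetical way to put a number on “verbatim content”: count how many three-word sequences in a student’s notes also appear word-for-word in the lecture transcript. This is a minimal Python sketch under that assumption, not the authors’ actual procedure or software; the function names and the example sentences are made up for illustration.

```python
import re


def trigrams(text: str) -> set[tuple[str, ...]]:
    """Lowercase the text, keep only words, and return all 3-word sequences."""
    words = re.findall(r"[a-z']+", text.lower())
    return {tuple(words[i:i + 3]) for i in range(len(words) - 2)}


def verbatim_overlap(notes: str, transcript: str) -> float:
    """Share of the note-taker's 3-word sequences that also appear in the transcript
    (0.0 = nothing copied word-for-word, 1.0 = everything copied)."""
    note_grams = trigrams(notes)
    if not note_grams:
        return 0.0
    return len(note_grams & trigrams(transcript)) / len(note_grams)


# Invented example sentences, for illustration only.
transcript = "Bats use echolocation to find insects in complete darkness"
typed = "bats use echolocation to find insects in complete darkness"
handwritten = "bats hunt by sound, echoes, even in the dark"
print(verbatim_overlap(typed, transcript))        # 1.0
print(verbatim_overlap(handwritten, transcript))  # 0.0
```

The typed notes score 1.0 because every three-word chunk was copied; the paraphrased longhand notes score 0.0 even though they carry the same idea, which is the “reframing it in their own words” the authors were after.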

The laptop typers did take more notes, though, by word count. So in the next study, the researchers asked: “If allowed to study their notes, will the typers benefit from their more voluminous notes and show better performance?” This time the researchers made four 7-minute lectures on bats, bread, vaccines, and respiration and tested the students 1 week later. The results? The longhanders who studied performed the best. The verbatim typers performed the worst, particularly on conceptual versus factual questions, despite having more notes.

For the sake of truth and in the spirit of this blog’s overall objectivity, I should note that the paper is not very well done. It has many errors, some statistical, which were corrected in a Corrigendum, and some methodological, which could be addressed by a bigger study with more carefully parsed-out controls and more controlled conditions, or at least by using the same stimuli across studies. Nevertheless, at least one finding is robust, as it was replicated across all their studies:

“In three studies, we found that students who took notes on laptops performed worse on conceptual questions than students who took notes longhand” (Abstract)

Teachers, teach handwriting! No more “Of course we teach writing, just…, just not now, not today, not this year, not so soon, perhaps not until the child is a teenager, not this grade, not my responsibility, not required, not me…”.

REFERENCE: Mueller, PA & Oppenheimer, DM (2014). The Pen Is Mightier Than the Keyboard: Advantages of Longhand Over Laptop Note Taking. Psychological Science, 25(6): 1159–1168. DOI: 10.1177/0956797614524581. ARTICLE | FULLTEXT PDF | NPR cover

By Neuronicus, 1 Sept. 2019

P.S. Some of my followers pointed me to a new preregistered study that failed to replicate this paper (thanks, followers!). Urry et al. (2019) found that typers write more words and take notes verbatim, just as Mueller & Oppenheimer (2014) found, but this did not benefit the typers, as there was no difference between conditions when it came to learning without studying the notes.

The authors did not address the notion that “depth of processing facilitates learning”, though, a notion that is now a theory because it has been replicated ad nauseam in hundreds of thousands of papers. Perhaps the two papers could be reconciled by a third study that parses out the attention component of the experiments, for example with introspection questionnaires. What I mean is that typers can do mindless transcription, in which case there is no depth of processing, resulting in the Mueller & Oppenheimer (2014) observation, or they can actually pay attention to what they type, in which case there is depth of processing and we get the Urry et al. (2019) findings. But the longhanders have no choice but to pay attention because they cannot write verbatim, so, in my mind, we’re back to square one: longhanders will do better overall. Handwriting your notes is the safer bet for retention, then, because the attention component is not voluntary but required by the task, as it were, at hand.

REFERENCE: Urry, H. L., et al. (2019, February 9). Don’t Ditch the Laptop Just Yet: A Direct Replication of Mueller and Oppenheimer’s (2014) Study 1 Plus Mini-Meta-Analyses Across Similar Studies. PsyArXiv. doi:10.31234/osf.io/vqyw6. FREE FULLTEXT PDF

By Neuronicus, 2 Sept. 2019

Pic of the day: African dogs sneeze to vote

Excerpt from Walker et al. (2017), p. 5:


“We also find an interaction between total sneezes and initiator POA in rallies (table 1) indicating that the number of sneezes required to initiate a collective movement differed according to the dominance of individuals involved in the rally. Specifically, we found that the likelihood of rally success increases with the dominance of the initiator (i.e. for lower POA categories) with lower-ranking initiators requiring more sneezes in the rally for it to be successful (figure 2d). In fact, our raw data and the resultant model showed that rallies never failed when a dominant (POA1) individual initiated and there were at least three sneezes, whereas rallies initiated by lower ranking individuals required a minimum of 10 sneezes to achieve the same level of success. Together these data suggest that wild dogs use a specific vocalization (the sneeze) along with a variable quorum response mechanism in the decision-making process. […]. We found that sneezes, a previously undocumented unvoiced sound in the species, are positively correlated with the likelihood of rally success preceding group movements and may function as a voting mechanism to establish group consensus in an otherwise despotically driven social system.”
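The two thresholds in the quote can be caricatured as a simple quorum rule. This is a deliberately crude Python sketch: the authors fitted a probabilistic model, whereas the hypothetical `rally_succeeds` function below treats the reported numbers (at least 3 sneezes for a dominant POA1 initiator, at least 10 for a lower-ranking one) as hard cut-offs. Below those numbers, real rallies sometimes succeed and sometimes fail; the data do not claim a guaranteed failure.

```python
# Hypothetical simplification of the quoted result: hard sneeze quorums
# instead of the probabilistic model the authors actually fitted.
DOMINANT_QUORUM = 3       # POA1 initiator: rallies never failed with >= 3 sneezes
SUBORDINATE_QUORUM = 10   # lower-ranking initiator: >= 10 sneezes for the same success


def rally_succeeds(initiator_is_dominant: bool, sneezes: int) -> bool:
    """Return True if the number of sneezes in the rally reaches the quorum
    that applies to this initiator's rank."""
    quorum = DOMINANT_QUORUM if initiator_is_dominant else SUBORDINATE_QUORUM
    return sneezes >= quorum


for dominant, sneezes in [(True, 3), (False, 3), (False, 10)]:
    rank = "dominant" if dominant else "lower-ranking"
    print(f"{rank} initiator, {sneezes} sneezes -> go: {rally_succeeds(dominant, sneezes)}")
```

The point of the caricature is the asymmetry: the same three sneezes that set the pack moving behind a dominant dog are not enough behind a subordinate one, which is why the authors call it a variable quorum.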

REFERENCE: Walker RH, King AJ, McNutt JW, & Jordan NR (6 Sept. 2017). Sneeze to leave: African wild dogs (Lycaon pictus) use variable quorum thresholds facilitated by sneezes in collective decisions. Proceedings of the Royal Society B. Biological Sciences, 284(1862). pii: 20170347. doi: 10.1098/rspb.2017.0347. PMID: 28878054, PMCID: PMC5597819. ARTICLE | FREE FULLTEXT PDF

By Neuronicus, 1 August 2019

Education raises intelligence

Intelligence is a dubious concept in psychology and biology because it is difficult to define. In any science, something has a workable definition when it is described by unique, testable operations or observations. But “intelligence” has eluded a workable definition, having gone through multiple transformations in the past hundred years or so, perhaps more than any other psychological construct (except “mind”). Despite Binet’s claim more than a century ago that there is such a thing as IQ and that he had a way to test for it, many psychologists and, to a lesser extent, neuroscientists are still trying to figure out what it is. Neuroscientists to a lesser extent because, once the field as a whole could not agree on a good definition, it moved on to things it could agree on, i.e. executive functions.

Of course, I am generalizing trends to entire disciplines and I shouldn’t; not all psychology has a problem with operationalizations and replicability, just as not all neuroscientists are paragons of clarity and good science. In fact, intelligence research seems to be rather vibrant, judging by the number of publications. Who knows, maybe psychologists have reached a consensus about what the thing is. I haven’t truly kept up with IQ research, partly because I think the tests used to assess it are flawed (so you don’t know what exactly you are measuring) and tailored to a small segment of the population (Western society, culturally embedded, English-language conceptualizations etc.), and partly because of the circularity of the definitions (e.g. How do I know you are highly intelligent? You scored well on IQ tests. What is IQ? What the IQ tests measure).

But the final nail in the coffin of intelligence research for me was a very popular definition by Legg & Hutter (2007): intelligence is “the ability to achieve goals”. So the poor, sick, and unlucky are just dumb? I find this definition incredibly insulting to the sheer diversity within the human species. This definition is also blatantly discriminatory, particularly towards the poor, whose lack of options, access to good education, or even a plain healthy meal puts a serious brake on goal achievement. Conversely, there are people who want for nothing, having been born into opulence and fame, but whose intellectual prowess seems to be lacking, to put it mildly, and who owe their “goal achievement” to an accident of birth or circumstance. The fact that this definition is so widely accepted in human research soured me on the entire field. But I’m hopeful that researchers will abandon this definition, which is more suited to computer programs than to human beings; after all, paradigm shifts happen all the time.

In contrast, executive functions are more clearly defined. The one I like the most is that given by Banich (2009): “the set of abilities required to effortfully guide behavior toward a goal”. Not to achieve a goal, but to work toward a goal. With effort. Big difference.

So what are those abilities? As I said in the previous post, there are three core executive functions: inhibition/control (both behavioral and cognitive), working memory (the ability to temporarily hold information active), and cognitive flexibility (the ability to think about and switch between two different concepts simultaneously). From these three core executive functions, higher-order executive functions are built, such as reasoning (critical thinking), problem solving (decision-making) and planning.

Now I might have left you with the impression that intelligence = executive functioning, and that wouldn’t be true. There is a clear correspondence between executive functioning and intelligence, but it is not a perfect correspondence, and many a paper (and a book or two) has been written to parse out which is which. For me, the most compelling argument that executive functions and whatever it is that IQ tests measure are at least partly distinct is that brain lesions that affect one may not affect the other. It is beyond the scope of this blogpost to analyze the differences and similarities between intelligence and executive functions. But to clear up just a bit of the confusion, I will make this broad statement: executive functions are the foundation of intelligence.

I have another qualm with psychological research into intelligence: a large number of psychologists believe intelligence is a fixed value. In other words, you are born with a certain amount of it and that’s it. It may vary a bit depending on your life experiences, either increasing or decreasing your IQ, but by and large you stay in the same ballpark. In contrast, most neuroscientists believe all executive functions can be drastically improved with training. All of them.

After this much semi-coherent rambling, here is the actual crux of the post: intelligence can be trained too. Or, I should say, IQ can be raised with training. Ritchie & Tucker-Drob (2018) performed a meta-analysis looking at the IQ and education of over 600,000 healthy participants. They confirmed a previously known observation that people who score higher on IQ tests complete more years of education. But why? Is it because highly intelligent people like to learn, or because longer education increases IQ? After a careful statistical analysis of 42 studies on the subject, the authors conclude that the more educated you are, the more intelligent you become. How much more? About 1 to 5 IQ points per additional year of education. Moreover, this effect persists for a lifetime; the gain in intelligence does not diminish with the passage of time or after leaving school.

This is a good paper; its conclusions are statistically robust and consistent. Anybody can check it out, as it is an open-access paper and the authors have also made their data, codebooks, analysis scripts, and outputs freely available.

For me, the conclusion is inescapable: if you think that we, as a society, or you, as an individual, would benefit from having more intelligent people around you, then you should support free access to good education. Not exactly where you thought I was going with this, eh ;)?

REFERENCE: Ritchie SJ & Tucker-Drob EM. (Aug, 2018, Epub 18 Jun 2018). How Much Does Education Improve Intelligence? A Meta-Analysis. Psychological Science, 29(8):1358-1369. PMID: 29911926, PMCID: PMC6088505, DOI: 10.1177/0956797618774253. ARTICLE | FREE FULLTEXT PDF | SUPPLEMENTAL DATA | Data, codebooks, scripts (Mplus and R), outputs

Nota bene: I’ve been asked what that “1 additional year” of education means. Is it that with every year of education you gain up to 5 IQ points? No, not quite. If that were the case, assuming I started with a normal IQ of 100, I’d be at… 26 years of education (not counting postdoc) multiplied by, let’s say, 3 IQ points, which makes me 178. Not bad, not bad at all. :))) No, what the authors mean is that, among other datasets, they had access to a huge cohort dataset from Norway spanning the moment when the country increased compulsory education by 2 years. So the researchers could compare the IQ tests of people from before and after the policy change; these tests were administered to all males at the same age, when they entered compulsory military service. They saw an increase of 1 to 5 IQ points per extra year of education.
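To make the difference between the two readings concrete, here is the arithmetic as a small Python sketch. The starting IQ of 100, the 3 points per year, and the idea that the per-year gain simply adds up over the 2 extra years of the Norwegian reform are illustrative assumptions; only the 1-to-5-point range comes from the paper.

```python
BASELINE_IQ = 100  # assumption: an average starting IQ


def naive_iq(years_of_education: int, points_per_year: int = 3) -> int:
    """The (wrong) reading: every single year of schooling keeps adding points."""
    return BASELINE_IQ + years_of_education * points_per_year


def reform_gain(extra_years: int, low: float = 1.0, high: float = 5.0) -> tuple[float, float]:
    """The intended reading: the expected gain from the *extra* years a policy adds,
    assuming (our assumption) that the 1-5 points/year estimate simply adds up."""
    return extra_years * low, extra_years * high


print(naive_iq(26))    # 178
print(reform_gain(2))  # (2.0, 10.0)
```

The naive reading stacks the effect over a whole academic career; the intended one only applies it to the marginal years a policy change adds, which is what the Norwegian comparison could actually measure.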

By Neuronicus, 14 July 2019

Love and the immune system

Valentine’s Day is a day when we celebrate romantic love (well, some of us do), a tradition that started long before the famous greeting card company Hallmark was established. Fittingly, I found the perfect paper to cover for this occasion.

In the past couple of decades it has become clear to scientists that there is no such thing as a mental experience without corresponding physical changes. Why should falling in love be any different? Several groups have already found that levels of some chemicals (oxytocin, cortisol, testosterone, nerve growth factor, etc.) change when we fall in love. There might be other changes as well. So Murray et al. (2019) decided to dive right in and check how the immune system responds to love, if at all.

For two years, the researchers looked at certain markers in the immune system of 47 women aged 20 or so. They drew blood when the women reported being “not in love (but in a new romantic relationship), newly in love, and out-of-love” (p. 6). Then they sent the samples to their university’s core facility to toil over microarrays. Microarray techniques can be quickly summarized thusly: take a bunch of molecules of interest, in this case bits of single-stranded DNA, and stick them on a silicon plate or a glass slide in a specific order. Then you run your sample over it, and what sticks, sticks; what doesn’t, doesn’t. Remember that DNA loves to be double-stranded, so any single strand will stick to its counterpart, the complementary strand. Label your sample with a fluorescent dye and voilà, the spots that light up give you an array of genes expressed in a certain type of tissue in a certain condition.
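The “what sticks, sticks” logic can be sketched as a toy model in Python. This is only an illustration under loud assumptions: the gene names and 4-letter probe sequences are made up, real probes are much longer, real hybridization tolerates some mismatches, and a real scanner measures fluorescence intensity rather than counting strands.

```python
COMPLEMENT = str.maketrans("ACGT", "TGCA")


def reverse_complement(seq: str) -> str:
    """Return the strand that would pair with `seq` (both read 5'->3')."""
    return seq.translate(COMPLEMENT)[::-1]


def hybridize(probes: dict[str, str], labeled_sample: list[str]) -> dict[str, int]:
    """Count how many labeled sample strands stick to each probe spot.

    Toy rule: a strand sticks only if it is the exact reverse complement
    of the probe; everything else washes off the slide.
    """
    signal = {gene: 0 for gene in probes}
    for strand in labeled_sample:
        for gene, probe in probes.items():
            if strand == reverse_complement(probe):
                signal[gene] += 1  # more bound strands -> brighter spot
    return signal


# Hypothetical array with two spots and a hypothetical labeled sample.
probes = {"geneA": "ATGC", "geneB": "GGTA"}
sample = ["GCAT", "GCAT", "TTTT"]
print(hybridize(probes, sample))  # {'geneA': 2, 'geneB': 0}
```

In this toy run, the geneA spot lights up because two sample strands pair with its probe, while the geneB spot stays dark; that pattern of lit and dark spots is what the array reports as expression.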

Talking about microarrays took me a bit down memory lane. When fMRI started to be a “must” in neuroscience, there followed a period when the science “market” was flooded with “salad” papers. We called them that because so many parts of the brain were reported as “lit up” in a given task that they made a veritable “salad of brain parts”, out of which it was very difficult to figure out what was going on. I swear that now that the fMRI field has matured a bit and learned how to correct for multiple comparisons, as well as to use some other fancy stats, the place of honor in the vegetable mix analogy has been relinquished to the ‘-omics’ studies. In other words, a big portion of the whole-genome or transcriptome studies became “salad” studies: too many things show up as statistically significant to make head or tail of it.

However, Murray et al. (2019) made a valiant, and successful, effort to figure out what those 61 up- or down-regulated gene transcripts in the immune system cells of 17 women falling in love actually mean. There’s quite a bit I am leaving out but, in a nutshell, love upregulated (that is, “increased”) the expression of genes involved in innate immunity to viruses, presumably to facilitate sexual reproduction, the authors say.

The paper is well written and the authors graciously remind us that the study has some limitations. Nevertheless, this is another fine addition to the unbelievably fast-growing body of knowledge about the human body and behavior.

Pity that this research was done only with women. I would have loved to see how men’s immune systems respond to falling in love.

REFERENCE: Murray DR, Haselton MG, Fales M, & Cole SW. (Feb 2019, Epub 2 Oct 2018). Falling in love is associated with immune system gene regulation. Psychoneuroendocrinology, Vol. 100, Pg. 120-126. PMID: 30299259, PMCID: PMC6333523 [Available on 2020-02-01], DOI: 10.1016/j.psyneuen.2018.09.043. ARTICLE

FYI: PMC6333523 [Available on 2020-02-01] means that the full text will be freely available to the public one year after publication on the US government website PubMed (https://www.ncbi.nlm.nih.gov/pubmed/), no matter how much Elsevier charges for it. Always, always check the PMC library (https://www.ncbi.nlm.nih.gov/pmc/) on PubMed to see whether a paper you saw in Nature or an Elsevier journal is free there, because more often than you’d think, it is.

PubMed = a database from the U.S. National Institutes of Health’s National Library of Medicine (NIH/NLM), comprising “more than 29 million citations for biomedical literature from MEDLINE, life science journals, and online books. Citations may include links to full-text content from PubMed Central and publisher web sites”.

PMC = “PubMed Central® (PMC) is a free fulltext archive of biomedical and life sciences journal literature at the U.S. National Institutes of Health’s National Library of Medicine (NIH/NLM)”, with a whopping full-text library of over 5 million papers and growing rapidly. Love PubMed!

By Neuronicus, 14 February 2019

Milk-producing spider

In biology, organizing living things into categories is called taxonomy. Such categories are established based on characteristics shared by their members. Traditionally, these characteristics were mostly visual attributes. For example, a red-footed booby (it’s a bird, silly!) is obviously different from a blue-footed booby, so we put them in different categories, which Aristotle called in Greek something like species.

Biological taxonomy is very useful, not only for providing biologists with countless hours of fighting (both verbal and physical!), but also for informing us of all sorts of unexpected relationships between living things. These relationships, in turn, can give us insights into our own evolution, but also into the evolution of things inimical to us, like diseases, and, perhaps, their cure. Also extremely important, it allows scientists from all over the world to have a common language, thus maximizing information sharing and minimizing misunderstandings.

All well and good. And it was all well and good ever since Carl Linnaeus introduced his famous taxonomy system in the 18th century, the one we still use today, with ranks like species, genus, family, order, class, and kingdom. Then we figured out how to sequence the DNA of the things around us, and this information threw a lot of Linnaean classifications out the window. It turns out that some organisms that look similar are not genetically similar; likewise, some living things that we thought were very different from one another turned out, genetically speaking, not to be so different.

You will say, then: alright, out with visual taxonomy, in with phylogenetic taxonomy. This would be absolutely peachy for a minority of the planet’s organisms, like animals and plants, but a nightmare for the more promiscuous organisms that have no problem swapping bits of DNA back and forth, like some bacteria, so that you no longer know who’s who. And don’t even get me started on viruses, about which we are still debating whether they are alive in the first place.

When I grew up there were 5 regna or kingdoms in our tree of life – Monera, Protista, Fungi, Plantae, Animalia – each with very distinctive characteristics. Likewise, the class Mammalia from the Animal Kingdom was characterized by the females feeding their offspring with milk from mammary glands. Period. No confusion. But now I have no idea – nor do many other biologists, rest assured – how many domains or kingdoms or empires we have, nor even what the definition of a species is anymore!

As if that’s not enough, even those Linnaean characteristics that we thought were set in stone are amenable to change. Which is good; it shows the progress of science. But I didn’t think that something like the definition of mammal would change. Mammals are organisms whose females feed their offspring with milk from mammary glands, as I vouchsafed above. Pretty straightforward. And not spiders. Let me be clear on this: spiders did not feature in my (or anyone’s!) definition of mammals.

Until Chen et al. (2018) published their weird article a couple of weeks ago. The abstract is free for all to see and states that the females of a jumping spider species feed their young with milk secreted by their body until the age of subadulthood. Mothers continue to offer parental care past the maturity threshold. The milk is necessary for the spiderlings because without it they die. That’s all.

I read the whole paper, since it was only 4 pages long, and here are some more details about their discovery. The species of spider they looked at is Toxeus magnus, a jumping spider that looks like an ant. The mother produces milk from her epigastric furrow and deposits it on the nest floor and walls, from where the spiderlings ingest it (0-7 days). After the first week of this, the spiderlings suck the milk directly from the mother’s body and continue to do so for the next two weeks (7-20 days), after which they start leaving the nest to forage for themselves. But they return, and for the next period (20-40 days) they get their food both from the mother’s milk and from independent foraging. Spiderlings get weaned by day 40, but they still come home to sleep at night. At day 52 they are officially considered adults. Interestingly, “although the mother apparently treated all juveniles the same, only daughters were allowed to return to the breeding nest after sexual maturity. Adult sons were attacked if they tried to return. This may reduce inbreeding depression, which is considered to be a major selective agent for the evolution of mating systems (p. 1053).”

During all this time, including during the offspring’s emergence into adulthood, the mother also supplied house maintenance, carrying out her children’s exuviae (shed exoskeletons) and repairing the nest.

The authors then did a series of experiments to see what role nursing and other maternal care at different stages play in the fitness and survival of the offspring. Blocking the mother’s milk production with correction fluid immediately after hatching killed all the spiderlings, showing that they are completely dependent on the mother’s milk. Removing the mother after the spiderlings started foraging (day 20) drastically reduced survivorship and body size, showing that the mother’s care is essential for her offspring’s success. Moreover, the mother’s taking care of the nest and keeping it clean reduced the occurrence of parasite infections on the juveniles.

The authors analyzed the milk and it’s highly nutritious: “spider milk total sugar content was 2.0 mg/ml, total fat 5.3 mg/ml, and total protein 123.9 mg/ml, with the protein content around four times that of cow’s milk (p. 1053)”.
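The “around four times” comparison is easy to check. A minimal sketch: the spider milk figures are the ones quoted from Chen et al. (2018), while the cow’s milk value of roughly 3.3 g of protein per 100 mL (33 mg/ml) is an assumption on my part, a typical textbook figure that varies by breed and season.

```python
# Spider milk composition in mg/ml, as quoted from Chen et al. (2018), p. 1053.
SPIDER_MILK_MG_PER_ML = {"sugar": 2.0, "fat": 5.3, "protein": 123.9}

# Assumption: cow's milk carries about 3.3 g protein per 100 mL, i.e. ~33 mg/ml.
COW_MILK_PROTEIN_MG_PER_ML = 33.0

ratio = SPIDER_MILK_MG_PER_ML["protein"] / COW_MILK_PROTEIN_MG_PER_ML
print(f"Spider milk has about {ratio:.1f}x the protein of cow's milk")
# prints: Spider milk has about 3.8x the protein of cow's milk
```

Under that assumption the ratio comes out a bit under 4, consistent with the authors’ “around four times”.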

Speechless I am. Good for the spider, I guess. Spider milk will have exorbitant costs. (Apparently, slight finger pressure on the milk-secreting region makes the mother spider secrete the milk, not at all unlike the human mother.) Spiderlings die without the mother’s milk. Responsible farming? Spider milker qualifications? I’m gonna lie down, I got a headache.

REFERENCE: Chen Z, Corlett RT, Jiao X, Liu SJ, Charles-Dominique T, Zhang S, Li H, Lai R, Long C, & Quan RC (30 Nov. 2018). Prolonged milk provisioning in a jumping spider. Science, 362(6418):1052-1055. PMID: 30498127, DOI: 10.1126/science.aat3692. ARTICLE | Supplemental info (check out the videos)

By Neuronicus, 13 December 2018

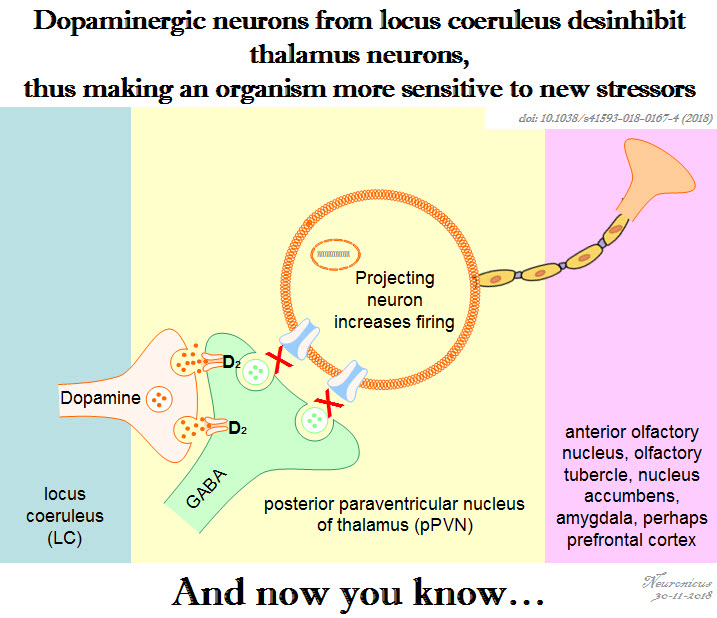

Pic of the day: Dopamine from a non-dopamine place

Reference: Beas BS, Wright BJ, Skirzewski M, Leng Y, Hyun JH, Koita O, Ringelberg N, Kwon HB, Buonanno A, & Penzo MA (Jul 2018, Epub 18 Jun 2018). The locus coeruleus drives disinhibition in the midline thalamus via a dopaminergic mechanism. Nature Neuroscience, 21(7):963-973. PMID: 29915192, PMCID: PMC6035776 [Available on 2018-12-18], DOI: 10.1038/s41593-018-0167-4. ARTICLE

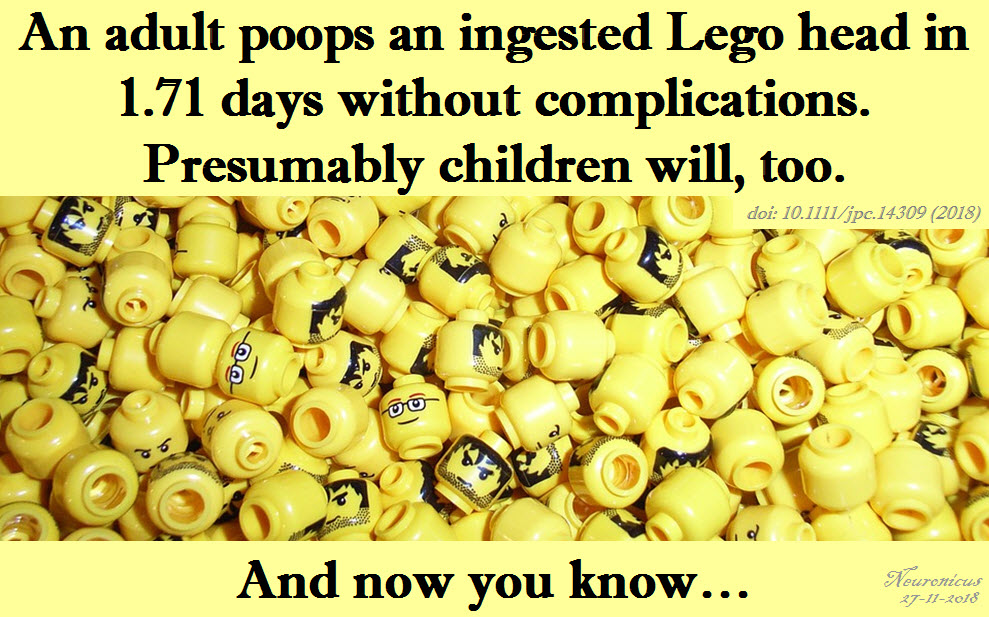

Pooping Legos

Yeah, alright… uhm… how exactly should I approach this paper? I’d better just dive into it (oh boy! I shouldn’t have said that).

The authors of this paper were adult health-care professionals in the pediatric field. These three males and three females were also the participants in the study. They kept a poop-diary noting the frequency and volume of bowel movements (Did they poop directly on a scale or did they have to scoop it out in a bag?). The researchers/subjects developed a Stool Hardness and Transit (SHAT) metric to… um.. “standardize bowel habit between participants” (p. 1). In other words, to put the participants’ bowel movements on the same level (please, no need to visualize, I am still stuck at the poop-on-a-scale phase), the authors looked – quite literally – at the consistency of the poop and gave it a rating. I wonder if they checked for inter-rater reliability… meaning did they check each other’s poops?…

Then the researchers/subjects ingested a Lego figurine head, on purpose, somewhere between 7 and 9 a.m. Then they timed how long it took to exit. The average FART score (Found and Retrieved Time) was 1.71 days. “There was some evidence that females may be more accomplished at searching through their stools than males, but this could not be statistically validated” due to the small sample size, if not the poops’. It took 1 to 3 stools for the object to be found, although poor subject B had to search through his 13 stools over a period of 2 weeks to no avail. I suppose that’s what you get if you miss the target, even if you have a PhD.

The pre-SHAT and SHAT scores of the participants did not differ, suggesting that the Lego head did not alter the poop consistency (I got nothin’ here; the authors’ acronyms are sufficient scatological allusion). From a statistical standpoint, the one who couldn’t find his head in his poop (!) should not have been included in the pre-SHAT score group. Serves him right.

I wonder how they searched through the poop… A knife? A sieve? A squashing spatula? Gloved hands? Were they floaters or did the poop sink to the bottom of the toilet? Then how was it retrieved? Did the researchers have to poop in a bucket so that no data would be lost? Upon direct experimentation 1 minute ago, I can vouch that a Lego head is completely buoyant. Would that affect the floatability of the stool in question? That’s what I’d like to know. Although, to be fair, no, that’s not what I want to know; what I desire the most is a far larger sample size so some serious stats can be conducted. With different Lego parts. So they can poop bricks. Or, as suggested by the authors, “one study arm including swallowing a Lego figurine holding a coin” (p. 3), so one can draw parallels between Lego ingestion and coin ingestion research, the latter being, apparently, far more prevalent. So many questions that still need to be answered! More research is needed, if only grants were as… regular as the raw data.

The paper, albeit short and to the point, fills a gap in our scatological knowledge database (Oh dear Lord, stop me!). The aim of the paper was to show that objects ingested by children tend to pass without a problem. Also of value, the paper asks pediatricians to counsel parents not to search for the object in the faeces to prove object retrieval because “if an experienced clinician with a PhD is unable to adequately find objects in their own stool, it seems clear that we should not be expecting parents to do so” (p. 3). Seems fair.

REFERENCE: Tagg A, Roland D, Leo GS, Knight K, Goldstein H, Davis T, & Don’t Forget The Bubbles (22 Nov 2018). Everything is awesome: Don’t forget the Lego. Journal of Paediatrics and Child Health, DOI: 10.1111/jpc.14309. ARTICLE

By Neuronicus, 27 November 2018

Apathy

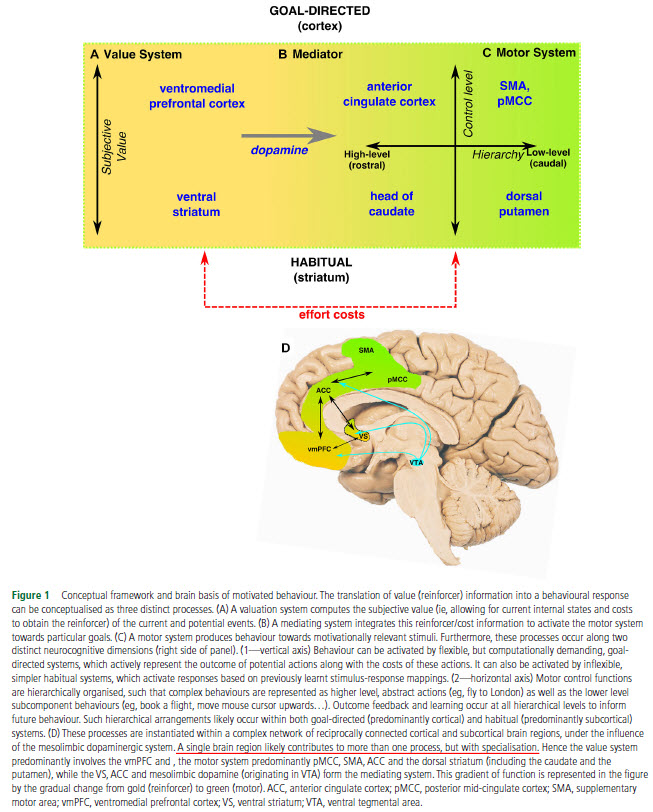

Le Heron et al. (2018) define apathy as a marked reduction in goal-directed behavior. But in order to move, one must be motivated to do so. Therefore, a generalized form of impaired motivation is also a hallmark of apathy.

The authors compiled for us a nice mini-review, combing through the literature on motivation in order to identify, if possible, the neurobiological mechanism(s) of apathy. First, they go very succinctly through the neuroscience of motivated behavior. Very succinctly, because there are literally hundreds of thousands of worthwhile pages out there on this subject. Although several other models have been proposed, the authors’ new model of motivation includes the usual suspects (dopamine, striatum, prefrontal cortex, anterior cingulate cortex), and you can see it in Fig. 1.

After this intro, the authors go on to showcase findings from the effort-based decision-making field, which suggest that the dopamine-producing neurons of the ventral tegmental area (VTA) are fundamental in choosing between a high-effort, high-reward action and a low-effort, low-reward one. Contrary to what Wikipedia tells you, a reduction, not an increase, in mesolimbic dopamine is associated with apathy, i.e., with preferring low-effort, low-reward activities.

Next, the authors focus on why the apathetic are… apathetic. Basically, they asked the question: “For the apathetic, is the reward too little or is the effort too high?” By looking at some cleverly designed experiments meant to parse out sensitivity to reward from sensitivity to effort costs, the authors conclude that the problem lies on the reward side: apathetic people don’t find the rewards good enough to move for. Therefore, the answer is that the reward is too little.

In a nutshell, apathetic people think “It’s not worth it, so I’m not willing to put in the effort to get it”. But if somehow they are made to judge the reward as good enough, to think “it’s worth it”, they are willing to work their darndest to get it, like everybody else.

The application of this is that in order to get people off the couch and doing stuff, you have to present them with a reward that they consider worth moving for; in other words, you have to motivate them. To which any practicing psychologist or counselor would say: “Duh! We’ve been saying that for ages. Glad that neuroscience finally caught up.” Because it’s easy to say people need to get motivated, but much, much harder to figure out how.

This was a difficult write for me, and even I recognize that the quality of this blogpost is crappy. That’s because, more or less, this paper is within my narrow field of specialization. There are points where I disagree with the authors (some definitions of terms), there are points where things are way more nuanced than presented (dopamine findings in reward), and finally there are personal preferences (the interpretation of data from Parkinson’s disease studies). Plus, Salamone (the second-to-last author) is a big name in dopamine research, meaning I’m familiar with his past 20 or so years’ worth of publications, so I can infer certain salient implications (one dopamine hypothesis is about saliency, get it?).

It’s an interesting paper, but it’s definitely written for the specialist. Hurray (or boo, whatever would be your preference) for another model of dopamine function(s).

REFERENCE: Le Heron C, Holroyd CB, Salamone J, & Husain M (26 Oct 2018, Epub ahead of print). Brain mechanisms underlying apathy. Journal of Neurology, Neurosurgery & Psychiatry. pii: jnnp-2018-318265. doi: 10.1136/jnnp-2018-318265. PMID: 30366958 ARTICLE | FREE FULLTEXT PDF

By Neuronicus, 24 November 2018

No licorice for you

I never liked licorice. And that turns out to be a good thing. Given that Halloween was just yesterday and licorice candy is still sold in the USA, I remembered the FDA’s warning from a year ago against consuming licorice.

So I dug out the data supporting this recommendation. It’s a review paper published 6 years ago by Omar et al. (2012), meant to raise awareness of the risks of licorice consumption and to urge the FDA to take regulatory steps.

The active ingredient in licorice is glycyrrhizic acid. This is hydrolyzed to glycyrrhetic acid by intestinal bacteria possessing a specialized β-glucuronidase. Glycyrrhetic acid, in turn, inhibits 11-β-hydroxysteroid dehydrogenase (11-β-HSD), the enzyme that normally inactivates cortisol. The result is increased cortisol activity: cortisol binds to the mineralocorticoid receptors in the kidneys, leading to low potassium levels (called hypokalemia). Additionally, licorice components can also bind directly to the mineralocorticoid receptors.

Eating 2 ounces of black licorice a day for at least two weeks (roughly equivalent to 2 mg/kg/day of pure glycyrrhizic acid) is enough to produce disturbances in the following systems:

- cardiovascular (hypertension, arrhythmias, heart failure, edemas)

- neurological (stroke, myoclonia, ocular deficits, carpal tunnel syndrome, muscle weakness)

- renal (low potassium, myoglobinuria, alkalosis)

- and others
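What does “2 ounces ≈ 2 mg/kg/day” imply about the candy itself? Here is a back-of-the-envelope sketch in Python, assuming a 70 kg adult (my assumption, not a figure from the paper). The only other input is the exact avoirdupois conversion of 28.349523125 g per ounce; the real glycyrrhizic acid content of licorice products varies a lot and is not stated here. The function name is hypothetical.

```python
GRAMS_PER_OUNCE = 28.349523125  # exact avoirdupois conversion


def implied_glycyrrhizic_fraction(candy_oz: float, dose_mg_per_kg: float,
                                  body_kg: float) -> float:
    """Fraction of the candy's mass that would have to be glycyrrhizic acid
    for `candy_oz` of candy to deliver `dose_mg_per_kg` to a `body_kg` person."""
    candy_mg = candy_oz * GRAMS_PER_OUNCE * 1000  # candy mass in mg
    dose_mg = dose_mg_per_kg * body_kg            # daily dose in mg
    return dose_mg / candy_mg


# Assumed 70 kg adult; 2 oz/day and 2 mg/kg/day are the figures quoted above.
fraction = implied_glycyrrhizic_fraction(2, 2, 70)
print(f"{fraction:.2%}")  # 0.25%
```

In other words, for the two figures to match, the candy would need to be roughly a quarter of a percent glycyrrhizic acid by weight; a lighter person would reach the same mg/kg dose with less candy.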

Although everybody is affected by licorice consumption, the most vulnerable are people over 40 years old, those who don’t poop every day, and those who are hypertensive, anorexic, or of the female persuasion.

Unfortunately, even people who don’t enjoy licorice candy can still consume licorice, as it is used as a sweetener or flavoring agent in many foods, like sodas and snacks. It is also used in naturopathic crap, herbal remedies, and other dangerous scams of that ilk. So beware of licorice and read the label, assuming the makers label it.

REFERENCE: Omar HR, Komarova I, El-Ghonemi M, Fathy A, Rashad R, Abdelmalak HD, Yerramadha MR, Ali Y, Helal E, & Camporesi EM. (Aug 2012). Licorice abuse: time to send a warning message. Therapeutic Advances in Endocrinology and Metabolism, 3(4):125-38. PMID: 23185686, PMCID: PMC3498851, DOI: 10.1177/2042018812454322. ARTICLE | FREE FULLTEXT PDF

By Neuronicus, 1 November 2018

Locus Coeruleus in mania

Of all the mental disorders, bipolar disorder, a.k.a. manic-depressive disorder, has the highest risk of suicide attempt and completion. If the thought of suicide crosses your mind, stop reading this; it’s not that important. What’s important is for you to call the toll-free National Suicide Prevention Lifeline at 1-800-273-TALK (8255).

Bipolar disorder is defined by manic episodes of elevated mood, activity, excitation, and energy alternating with episodes of depression characterized by feelings of deep sadness, hopelessness, worthlessness, low energy, and decreased activity. It is also more common than people usually expect, affecting about 1% or more of the world population. That means almost 80 million people! Therefore, it’s imperative to find out what’s causing it so we can treat it.

Unfortunately, the disease is very complex, with many brain parts, brain chemicals, and genes involved in its pathology. We don’t even fully understand how lithium, the best medication we have for lowering the risk of suicide, works. The good news is that neuroscientists haven’t given up; they are grinding at it, and with every study we get closer to subduing this monster.

One such study, freshly published last month, Cao et al. (2018), looked at a semi-obscure membrane protein, ErbB4. The protein is a receptor tyrosine kinase, which is a bit unfortunate because it means it is involved in ubiquitous cellular signaling, making it harder to pin down its exact role in a specific disorder. Indeed, ErbB4 has been found to play a role in neural development, schizophrenia, epilepsy, even ALS (Lou Gehrig’s disease).

Given that ErbB4 is found in some neurons involved in bipolar disorder, and that mutations in its gene are found in some people with bipolar disorder, Cao et al. (2018) sought to find out more about it.

First, they produced mice lacking the gene coding for ErbB4 in the neurons of the locus coeruleus, the part of the brain that makes norepinephrine (better known to European audiences as noradrenaline) out of dopamine. The mutant mice had a lot more norepinephrine and dopamine in their brains, which correlated with mania-like behaviors. You might have noticed that the term used was ‘mania-like’ and not ‘manic’, because we don’t know for sure how the mice feel; instead, we can see how they behave and from that infer how they feel. So the researchers put the mice through a battery of behavioral tests and observed that the mutant mice were hyperactive, showed fewer anxiety- and depression-like behaviors, and liked their sugary drink more than their normal counterparts did, which, taken together, are indices of mania.

Next, through a series of electrophysiological experiments, the scientists found that the absence of ErbB4 leads to mania by making another receptor in that brain region, the NMDA receptor, more active. When this receptor is hyperactive, it causes the neurons to fire more, releasing more norepinephrine. But when given lithium, the mutant mice behaved like normal mice. Correspondingly, they also had normally behaving NMDA receptors, which led to normal firing of the noradrenergic neurons.

So the mechanism looks like this (Jargon alert!):

No ErbB4 –> ↑ NR2B NMDAR subunit –> hyperactive NMDAR –> ↑ neuron firing –> ↑ catecholamines –> mania.

In conclusion, another piece of the bipolar puzzle has been uncovered. The next obvious step will be for the researchers to figure out a medicine that targets ErbB4 and see if it could treat bipolar disorder. Good paper!

P.S. If you’re not familiar with the journal eLife, go and check it out. For every study, the journal offers a half-page summary of the findings for the lay audience, called the eLife digest. I’ve seen this practice in other journals, but this one is generally very well written and truly aimed at the lay audience and the non-specialist. It is something like what I try to do here, minus the personal remarks and the parenthetical metacognitions that you’ll find in most of my posts. In short, the eLife digest is masterfully done. As my continuous struggles on this blog show, it is tremendously difficult for a scientist to write concisely, precisely, and without jargon at the same time. But eLife is doing it. Check it out. Plus, if you care to take a look at how science is done and published, eLife publishes the editors’ decision letters, the reviewers’ comments, and the authors’ responses for each paper. Reading those is truly a teaching moment.

REFERENCE: Cao SX, Zhang Y, Hu XY, Hong B, Sun P, He HY, Geng HY, Bao AM, Duan SM, Yang JM, Gao TM, Lian H, Li XM (4 Sept 2018). ErbB4 deletion in noradrenergic neurons in the locus coeruleus induces mania-like behavior via elevated catecholamines. Elife, 7. pii: e39907. doi: 10.7554/eLife.39907. PMID: 30179154 ARTICLE | FREE FULLTEXT PDF

By Neuronicus, 14 October 2018

The Mom Brain

Recently, I read an opinion piece titled “When I Became A Mother, Feminism Let Me Down”. The gist of it was that some (not all!) feminists, while empowering women and girls to be anything they want to be and to do anything a man or a boy does, fail to uplift the motherhood aspect of a woman’s life, should she choose to become a mother. In other words, even (or especially, in some cases) feminists look down on women who choose to switch from a paid job and professional career to an unpaid stay-at-home-mom career, as if being a mother were somehow beneath what a woman can be and achieve. As if raising the next generation of humans to be rational, informed, well-behaved social actors instead of ignorant, brutal egomaniacs were a trifling matter, not to be compared with the responsibilities and struggles of a CEO position.

Patriarchy notwithstanding, a woman can do anything a man can. And more. The ‘more’ refers to, naturally, motherhood. Evidently, fatherhood is also a thing. But the changes that happen in a mother’s brain and body during pregnancy, breastfeeding, and postpartum periods are significantly more profound than whatever happens to the most loving and caring and involved father.

Kim (2016) bundled some of these changes in a nice review, showing how these drastic and dramatic alterations actually have an adaptive function, preparing the mother for parenting. Equally important, some of the brain plasticity is permanent. The body might spring back into shape if the mother is young or puts a devilishly large amount of effort into it, but some brain changes are there to stay. Not all, though.

One of the most pervasive findings in motherhood studies is that hormones whose production increases during pregnancy and postpartum, like oxytocin and dopamine, sensitize the fear circuit in the brain. During the second trimester of pregnancy, and particularly during the third, expectant mothers become hypervigilant and hypersensitive to threats and to angry faces. A higher anxiety state is characterized, among other things, by preferentially scanning for threats and other bad stuff. Threats mean anything from the improbable tiger to the 1 in a million chance for the baby to be dropped by grandma to the slightly warmer forehead or the weirdly colored poopy diaper. The sensitization of the fear circuit, of which the amygdala is an essential part, is adaptive because it makes the mother less likely to miss or ignore her baby’s cry, so she attends to his or her needs. Also, attention to potential threats is conducive to better protection of the helpless infant from real dangers. This hypersensitivity usually lasts 6 to 12 months after childbirth, but it can last a lifetime in females already predisposed to anxiety or exposed to more stressful events than average.

Many new mothers worry whether they will be able to love their child, as they don’t feel the all-consuming love other women rave about before or during pregnancy. Rest assured, ladies, nature has your back. And your baby’s. Because as soon as you give birth, dopamine and oxytocin flood the body and the brain, and in so doing they modify the reward-motivation circuit, making new mothers literally obsessed with their newborn. The method of giving birth is inconsequential, as no differences in attachment have been noted (this is from a different study). Do not mess with mother’s love! It’s hardwired.

Another change happens to the brain structures underlying social information processing, like the insula or the fusiform gyrus, making mothers more adept at self-monitoring, reflection, and empathy. This is a rapid transformation, without which a mother might be less accurate in understanding the needs, mental state, and social cues of the very undeveloped ball of snot and barf that is the human infant (I said that affectionately, I promise).

In order to deal with all these internal changes and the external pressures of being a new mom, the brain has to put some coping mechanisms in place. (Did you know, non-parents, that for the first months of their newborns’ lives, mothers who breastfeed must do so at least every 4 hours? Can you imagine how berserk with sleep deprivation you would be after 4 months without a single night of full sleep, only catnaps?) Some would be surprised to find out (not mothers, though, I’m sure) that “new mothers exhibit enhanced neural activation in the emotion regulation circuit including the anterior cingulate cortex, and the medial and lateral prefrontal cortex” (p. 50). Which means that new moms are actually better at controlling their emotions, particularly at regulating negative emotional reactions. Shocking, eh?

Finally, it appears that very few parts of the brain are spared from this overhaul, as the entire brain of the mother first shrinks and then grows back, reorganized. Yeah, isn’t that weird? During pregnancy the brain shrinks, reaching its lowest point at childbirth, and then it starts to grow again, reaching its pre-pregnancy size 6 months after childbirth! And when it’s back, it’s different. The brain parts heavily involved in parenting, like the amygdala, involved in anxiety, the insula and superior temporal gyrus, involved in social information processing, and the anterior cingulate gyrus, involved in emotional regulation, all show increased gray matter volume. And so do many other brain structures that I didn’t list. A brain structure is rarely involved in only one thing, so the question (well, one of them) is: what else changes in mothers, in addition to their increased ability to parent?

I need to add a note here: the changes that Kim (2016) talks about are averaged. That means some women get changed more, some less. There is variability in plasticity, which should be a pleonasm. There is also variability in the human population, as any mother attending a school parents’ night-out can attest. Some mothers are paranoid with fear and overprotective, others are more laissez faire when it comes to eating from the floor.

But SOME changes do occur in all mothers’ brains and bodies. For example, all new mothers exhibit heightened attention to threats and, subsequently, raised levels of anxiety. But when does heightened attention to threats become debilitating anxiety? Thanks to more understanding and tolerance of these changes, more and more women feel comfortable reporting negative feelings after childbirth, so that now we know that postpartum mood problems, from the “baby blues” that affect 60-80% of mothers to full-blown postpartum depression, are a serious matter. A serious matter that needs serious attention from both professionals and the mother’s immediate social circle, for her sake as well as her infant’s. Don’t get me wrong, we (both males and females) still have a long way ahead of us to scientifically understand and socially accept the mother brain, but these studies are a great start. They acknowledge what all mothers know: that they are different after childbirth from the way they were before. Now we have to figure out how they are different and what we can do to make everyone’s lives better.

Kim (2016) is an OK review, a really easy read, and I wholeheartedly recommend it to non-specialists; you just have to skip the names of the brain parts and the rest is pretty clear. It is also a very short review, which will help with reader fatigue. The downside is that it doesn’t include a whole lotta studies, nor does it go into detail on the implications of what the handful it cites have found, but you’ll get the gist of it. The literature would be vastly more thorough if one included animal studies, which the author, curiously, left out. I know that a mouse is not a chimp is not a human, but all three of us are mammals, and social mammals at that. Surely there is enough biological overlap that extrapolations are warranted, even if only partially. Nevertheless, it’s a good start for those who want to know a bit about the changes motherhood brings to the brain, behavior, thoughts, and feelings.

Combined with what I already know about the neuroscience of maternity, my favourite takeaway is this: new moms are not crazy. They can’t help most of these changes. It’s biology, you see. So go easy on new moms. Moms, also go easy on yourselves and know that, whether they want to share or not, the other moms probably go through the same stuff. The other moms aren’t doing better than you are either. You’re not alone. And if that overactive threat circuit gives you problems, i.e., if you feel overwhelmed, it’s OK to ask for help. And if you don’t get it, ask for it again and again until you do. That takes courage, that’s empowerment.

P.S. The paper doesn’t look like it’s peer-reviewed. Yes, I know the peer-review publication system is flawed, I’ve been on the receiving end of it myself, but it’s been drilled into my skull that peer review is important, flawed as it is, so I thought I should mention it.

REFERENCE: Kim, P. (Sept. 2016). Human Maternal Brain Plasticity: Adaptation to Parenting, New Directions for Child and Adolescent Development, (153): 47–58. PMCID: PMC5667351, doi: 10.1002/cad.20168. ARTICLE | FREE FULLTEXT PDF

By Neuronicus, 28 September 2018

The Benefits of Vacation

My prolonged Internet absence over the last month or so was due to a prolonged vacation. In Europe. Which I loved. Both the vacation and Europe. Y’all, people, young and old, listen to me: do not neglect vacations, for they strengthen the body, nourish the soul, and embolden the spirit.

More pragmatically, vacations lower the stress level. Yes, even the stressful vacations lower the stress level, because the acute stress effects of “My room is not ready yet” / “Jimmy puked in the car” / “Airline lost my luggage” are temporary and physiologically different from the chronic stress effects of “I’ll lose my job if I don’t meet these deadlines” / “I hate my job but I can’t quit because I need health insurance” / “I’m worried for my child’s safety” / “My kids will suffer if I get a divorce” / “I can’t make the rent this month”.

Chronic stress results in a whole slew of real nasties, like cognitive, learning, and memory impairments, behavioral changes, issues with impulse control, immune system problems, weight gain, cardiovascular disease and so on and so on and so on. Even death. As I told my students countless times, chronic stress to the body is as real and physical as a punch in the stomach but far more dangerous. So take a vacation as often as you can. Even a few days of total disconnect help tremendously.

There are literally thousands of peer-reviewed papers out there that describe the ways in which stress produces all those bad things, but not so many papers about the effects of vacations. I suspect this is due to the inherent difficulty in accounting for the countless environmental variables that can influence one’s vacation and its outcomes, whereas identifying and characterizing stressors is much easier. In other words, lack of experimental control leads to paucity of good data. Nevertheless, from this paucity, Chen & Petrick (2013) carefully selected 98 papers from both academic and nonacademic publications about the benefits of travel vacations.

These are my take-home bullet-points:

- vacation effects last no more than a month

- vacations reduce both the subjective perception of stress and the objective measurement of it (salivary cortisol)

- people feel happier after taking a vacation

- there are some people who do not relax on vacation, presumably because they cannot ‘detach’ themselves from the stressors in their everyday life (long story here why some people can’t let go of problems)

- vacations lower the occurrence of cardiovascular disease

- vacations decrease work-related stress, work absenteeism, & work burnout

- vacations increase job performance

- the more you do on a vacation the better you feel, particularly if you’re older

- you benefit more if you do new things or go to new places instead of just staying home

- vacations increase overall life satisfaction

Happy vacationing!

REFERENCE: Chen, C-C & Petrick, JF (Nov. 2013, Epub 17 Jul. 2013). Health and Wellness Benefits of Travel Experiences: A Literature Review, Journal of Travel Research, 52(6):709-719. doi: 10.1177/0047287513496477. ARTICLE | FULLTEXT PDF via ResearchGate.

By Neuronicus, 20 July 2018

Is piracy the same as stealing?

Exactly 317 years ago, Captain William Kidd was tried and executed for piracy. Whether or not he was a pirate is debatable, but what is not in dispute is that people do like to pirate. Throughout human history, whenever there was opportunity, there was also theft. Wait… is theft the same as piracy?

If we talk about Captain “Arrr… me mateys” sailing the high seas under the “Jolly Roger” flag, there is no legal or ethical dispute that piracy is equivalent to theft. But what about today’s digital piracy? Despite what the aggrieved parties may vociferously argue, digital piracy is not theft, because what is being taken is a copy of the goodie, not the goodie itself; therefore it is an infringement and not an actual theft. That’s from a legal standpoint. Ethically though…

For Eres et al. (2016), theft is theft, whether the object of thievery is tangible or not. So why are people who have no problem pirating information from the internet squeamish when it comes to shoplifting the same item?

First, is it true that people are more likely to steal intangible things than physical objects? A questionnaire involving 127 young adults revealed that yes, people of both genders are more likely to steal intangible items, regardless of whether the items are cheap or expensive or whether the company that owns them is big or small. Older people were less likely to pirate, and those who had already pirated were more likely to do so in the future.

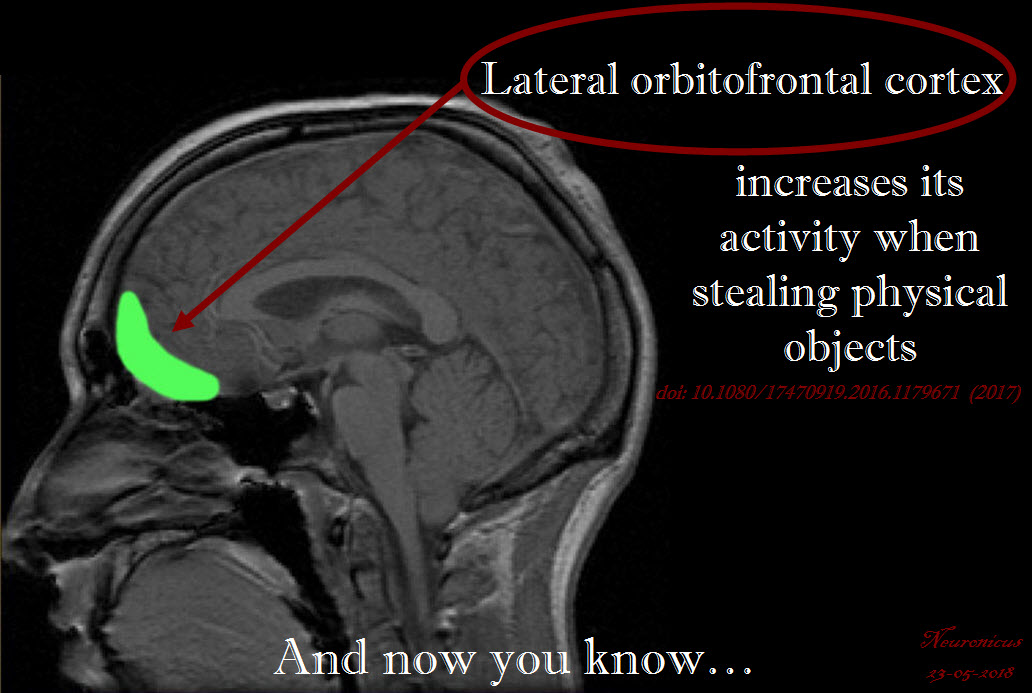

In a different experiment, Eres et al. (2016) stuck 35 people in the fMRI and asked them to imagine some items (e.g., book, music, movie, software) in either tangible form (e.g., CD, book) or intangible form (e.g., .pdf, .avi). Then they asked the participants how they would feel after stealing or purchasing these items.

People were inclined to feel guiltier if the item was illegally obtained, particularly if the object was tangible, suggesting that, at least from an emotional point of view, stealing and infringement are two different things. Increased activation of the left lateral orbitofrontal cortex (OFC) was seen when the illegally obtained item was tangible. The lateral OFC is a brain area known for its involvement in evaluating the nature of punishment and displeasurable information. The more sensitive to punishment a person is, the more likely they are to be morally sensitive as well.

Or, as the authors put it, it is more difficult to imagine intangible things than physical objects, and that “difficulty in representing intangible items leads to less moral sensitivity when stealing these items” (p. 374). Physical items are, well…, more physical, hence, possibly, demanding more immediate attention, at least evolutionarily speaking.

(Divergent thought. Some studies found that religious people are less socially moral than non-religious people. Could that be because, for the religious, the punishment for a social transgression is non-existent if they repent enough, whereas for the non-religious the punishment is immediate and factual?)

Like most social neuroscience imaging studies, this one lacks ecological validity (i.e., people imagined stealing; they did not actually steal), a lacuna that the authors are gracious enough to admit. Another drawback of imaging studies is the small sample size, which the authors believe is to blame for their failure to find a correlation between guilt scores and brain activation, a correlation that other studies have apparently shown.

A simple, interesting paper providing food for thought not only for psychologists, but for lawmakers and philosophers as well. I do not believe that stealing and infringement are the same. Legally they are not, and now we know that emotionally they are not either, so shouldn’t they also be separated morally?

And if so, should we punish people more or less for stealing intangible things? Intuitively, because I too have a left OFC that’s less active when talking about transgressing social norms involving intangible things, I think that punishment for copyright infringement should be less than that for stealing physical objects of equivalent value.

But value…, well, that’s where it gets complicated, isn’t it? Because the dignity of a fellow human, for example, is just as intangible as an .mp3. What price should we put on that? What punishment should we deliver to those robbing human dignity with impunity?

Ah, intangibility… it gets you coming and going.

I got on this thieving intangibles dilemma because I’m re-re-re-re-re-reading Feet of Clay, a Discworld novel by Terry Pratchett and this quote from it stuck in my mind:

“Vimes reached behind the desk and picked up a faded copy of Twurp’s Peerage or, as he personally thought of it, the guide to the criminal classes. You wouldn’t find slum dwellers in these pages, but you would find their landlords. And, while it was regarded as pretty good evidence of criminality to be living in a slum, for some reason owning a whole street of them merely got you invited to the very best social occasions.”

REFERENCE: Eres R, Louis WR, & Molenberghs P (Epub 8 May 2016, Pub Aug 2017). Why do people pirate? A neuroimaging investigation. Social Neuroscience, 12(4):366-378. PMID: 27156807, DOI: 10.1080/17470919.2016.1179671. ARTICLE

By Neuronicus, 23 May 2018

No Link Between Mass Shootings & Mental Illness

By Neuronicus, 25 February 2018

On Valentine’s Day another horrifying school mass shooting happened in the USA, leaving 17 people dead. Just like after the other mass shootings, a lot of people – from media to bystanders, from gun lovers to gun critics, from parents to grandparents, from police to politicians – talk about the link between mental illness and mass shootings. As one with advanced degrees in both psychology and neuroscience, I am tired of explaining over and over again that there is no significant link between the two! Mass shootings happen because an angry person has had – or was made to think they had – enough sorrow, stress, rejection and/or disappointment and HAS ACCESS TO A MASS KILLING WEAPON. Yeah, I needed the caps. Sometimes scientists too need to shout to be heard.

So here is the abstract of a book chapter straightforwardly titled “Mass Shootings and Mental Illness”. The entire text is available at the links in the reference below.

From Knoll & Annas (2015):

“Common Misperceptions

- Mass shootings by people with serious mental illness represent the most significant relationship between gun violence and mental illness.

- People with serious mental illness should be considered dangerous.

- Gun laws focusing on people with mental illness or with a psychiatric diagnosis can effectively prevent mass shootings.

- Gun laws focusing on people with mental illness or a psychiatric diagnosis are reasonable, even if they add to the stigma already associated with mental illness.

Evidence-Based Facts

- Mass shootings by people with serious mental illness represent less than 1% of all yearly gun-related homicides. In contrast, deaths by suicide using firearms account for the majority of yearly gun-related deaths.

- The overall contribution of people with serious mental illness to violent crimes is only about 3%. When these crimes are examined in detail, an even smaller percentage of them are found to involve firearms.

- Laws intended to reduce gun violence that focus on a population representing less than 3% of all gun violence will be extremely low yield, ineffective, and wasteful of scarce resources. Perpetrators of mass shootings are unlikely to have a history of involuntary psychiatric hospitalization. Thus, databases intended to restrict access to guns and established by guns laws that broadly target people with mental illness will not capture this group of individuals.

- Gun restriction laws focusing on people with mental illness perpetuate the myth that mental illness leads to violence, as well as the misperception that gun violence and mental illness are strongly linked. Stigma represents a major barrier to access and treatment of mental illness, which in turn increases the public health burden”.

REFERENCE: Knoll, James L. & Annas, George D. (2015). Mass Shootings and Mental Illness. In book: Gun Violence and Mental Illness, Edition: 1st, Chapter: 4, Publisher: American Psychiatric Publishing, Editors: Liza H. Gold, Robert I. Simon. ISBN-10: 1585624985, ISBN-13: 978-1585624980. FULLTEXT PDF via ResearchGate | FULLTEXT PDF via Psychiatry Online

The book chapter is not a peer-reviewed document, even if both authors are Professors of Psychiatry. To quiet putative voices raising concerns about that, here is a peer-reviewed paper with open access that says basically the same thing: